Rolling out new guides and information to help employees is one thing. It’s quite another to have them actually change behavior.

When guides are meant to train or help, the first question to ask is always “Is the guide successful?” If it’s not, then something went wrong. Luckily, many Digital Adoption Platforms (DAPs) have tools that track whether a guide is actually helping people or being ignored.

Those tools only help if you use them, though. This post focuses on what adoption of a guide really means and how to measure whether in-app help is successful.

Adoption is more important than usage of an in-app guide.

Contextual help isn’t simply about providing information; it’s about helping people do their job better. This post will help you reframe adoption for in‑app help so it actually ties to user behavior, business goals, and real learning impact.

The Myth: High Views = High Adoption

Just as with instructor-led training, counting butts in seats is meaningless. Our goal in training isn’t to have people passively consume content without gaining anything.

Action taken is the true measure of success. If employees don’t take action after a training session, the session wasn’t a success. Likewise, a guide that users see or click through without then taking action hasn’t done its job.

In‑app help is often mistakenly evaluated the same way people evaluate marketing: clicks, impressions, open rates, and step completions. But those metrics only tell you that a user interacted with your guide, not that the guide worked.

Here’s the truth:

A guide is only successful if the user completes the intended action after seeing it.

Everything else is just noise.

It’s the same with training: if someone attends a class but can’t perform the task afterward, we don’t congratulate ourselves on attendance. We fix the training.

At least, that’s how it should work in a world focused on meaningful results rather than self-congratulation.

The Shift: From “Who Opened It?” to “What Happened Next?”

Once you stop evaluating guides based on engagement, a more useful question emerges:

“What should a user be able to do after seeing this guide?”

This single question is the heartbeat of measuring in‑app help adoption.

These questions don’t matter much at all:

- “Did they read it?”

- “Did they click through it?”

What matters?

“Did they complete the task?”

In‑app help isn’t just part of the UX; it’s micro‑training delivered precisely when the user needs it. It’s not about using the application in general; it’s often about performing a specific task, just like performance support in training.

And every piece of training needs a clear learning outcome. Better yet, every piece of training needs a clear performance objective.

Setting a Goal for Every Guide (The Non‑Negotiable)

Before you write a word of a guide or place a step on a screen, define its goal. And I don’t mean vague aspirations like “help onboarding” or “reduce confusion.” I mean a concrete, observable action the user should complete shortly after engaging with the guide.

For example:

- Completing a profile setup

- Submitting a form

- Running a workflow

- Activating an integration

- Saving changes to a configuration

A good goal is:

- Observable: You can see the result through a trackable action.

- Measurable: The system can confirm it happened.

- Immediate: The action occurs soon after the guide is used.

- Meaningful: It advances the user’s ability to use the product successfully.

A guide without a goal is just decoration. Sometimes that’s necessary, but it should be the exception, not the rule. Purely informational guides are inevitable, but guides with concrete goals should outnumber them.

A Simple Framework: Guide → Completion → Goal

Once goals are clear, success becomes more measurable and more meaningful.

Here’s the flow we look for:

- Guide Trigger: The right users see the guide at the right time.

- Guide Completion: They follow the necessary steps or action (sometimes it’s just one action!)

- Goal Completion: They perform the intended task.

That last step, goal completion, is where adoption truly lives.

This creates a story: A user saw the guide → engaged with it → did the thing it was meant to help them do.

That sequence shows the guide made a real impact: users paid attention and were able to benefit from it. Everything else in the analytics is supporting detail.
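To make the Guide → Completion → Goal flow concrete, here is a minimal Python sketch that computes the conversion at each stage. It assumes you can export one record per user with three yes/no flags. The `UserJourney` record shape and the `funnel_rates` function are hypothetical illustrations, not part of any DAP’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserJourney:
    """One user's path through a single guide (hypothetical export shape)."""
    saw_guide: bool        # Guide Trigger: the guide was shown to this user
    completed_guide: bool  # Guide Completion: the user finished the guide's steps
    completed_goal: bool   # Goal Completion: the user performed the intended task


def _rate(numerator: int, denominator: int) -> float:
    """Safe division that returns 0.0 when a stage has no users."""
    return numerator / denominator if denominator else 0.0


def funnel_rates(journeys: list[UserJourney]) -> dict[str, float]:
    """Conversion at each stage of Guide -> Completion -> Goal.

    end_to_end counts only users who saw the guide, engaged with it,
    and then completed the goal (the full "story").
    """
    seen = [j for j in journeys if j.saw_guide]
    engaged = [j for j in seen if j.completed_guide]
    succeeded = [j for j in engaged if j.completed_goal]
    return {
        "guide_completion": _rate(len(engaged), len(seen)),
        "goal_after_completion": _rate(len(succeeded), len(engaged)),
        "end_to_end": _rate(len(succeeded), len(seen)),
    }


# Made-up counts for illustration: 300 users saw the guide, 50 never did.
journeys = (
    [UserJourney(True, True, True)] * 120
    + [UserJourney(True, True, False)] * 80
    + [UserJourney(True, False, False)] * 100
    + [UserJourney(False, False, False)] * 50
)
for stage, value in funnel_rates(journeys).items():
    print(f"{stage}: {value:.0%}")
# guide_completion: 67%
# goal_after_completion: 60%
# end_to_end: 40%
```

Looking at each stage separately shows where users drop off. In this illustration, most of the loss happens before the guide is completed, not after.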

Measuring Success: The Metrics That Actually Matter

Let’s be clear: views and clicks matter only in diagnosing why a guide may not be leading to the outcome. They don’t define success.

Here’s what does.

Goal Conversion Rate (GCR)

How many users performed the target action after seeing the guide?

If 300 users saw the guide, and 150 completed the task, your GCR is 50%.

Work out in advance what the target GCR should be for each guide. Deciding what success looks like beforehand makes it much clearer whether the guide succeeded.
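The GCR arithmetic is simple enough to script. This minimal sketch uses the 300/150 example above and compares the result against a target chosen before launch. The function names and the 60% target are hypothetical illustrations.

```python
def goal_conversion_rate(saw_guide: int, completed_goal: int) -> float:
    """GCR: share of users who saw the guide and then completed the target action."""
    if saw_guide <= 0:
        raise ValueError("saw_guide must be a positive count")
    if not 0 <= completed_goal <= saw_guide:
        raise ValueError("completed_goal must be between 0 and saw_guide")
    return completed_goal / saw_guide


def meets_target(gcr: float, target: float) -> bool:
    """True if the measured GCR reaches the target agreed on before launch."""
    return gcr >= target


gcr = goal_conversion_rate(saw_guide=300, completed_goal=150)
print(f"GCR: {gcr:.0%}")              # GCR: 50%
print(meets_target(gcr, target=0.6))  # False (0.6 is a hypothetical target)
```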

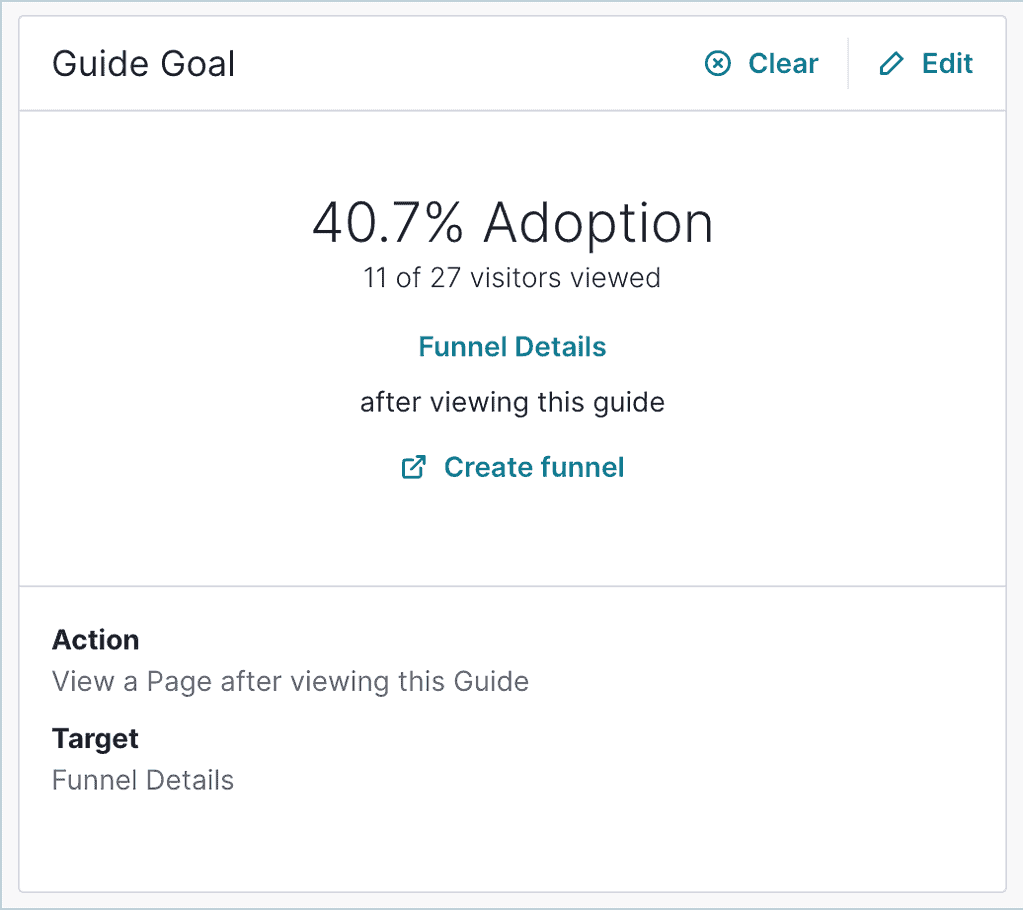

The adoption rate of a guide is similar to a GCR. We use Pendo for much of our in-app work, and nearly every guide gets a guide goal set up. That means the learning purpose of the guide is translated into action.

If someone sees a guide, there’s an action they should take afterward. If they meet that goal, the information in the guide is being adopted. Here’s what some of those outcomes might look like in Pendo.

Increase Compared to a Control Group

Did the guide actually change behavior compared to users who didn’t see it?

If users who saw a guide completed the task at 50% and the control group completed it at only 34%, that’s a meaningful increase. This is easier to quantify than a GCR because you don’t have to decide in advance what success looks like; the control group provides the baseline.
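Here is a rough sketch of that comparison using the 50% and 34% figures above. It reports both the absolute difference in percentage points and the relative lift. It assumes the control group is comparable to the users who saw the guide, ideally a randomly held-out group. `lift_vs_control` is a hypothetical helper.

```python
def lift_vs_control(treated_rate: float, control_rate: float) -> tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift as a fraction).

    Assumes both rates are completion shares between 0 and 1, and that the
    control group is comparable to the group that saw the guide.
    """
    if control_rate <= 0:
        raise ValueError("control_rate must be positive to compute relative lift")
    absolute_pp = (treated_rate - control_rate) * 100
    relative = (treated_rate - control_rate) / control_rate
    return absolute_pp, relative


abs_pp, rel = lift_vs_control(treated_rate=0.50, control_rate=0.34)
print(f"+{abs_pp:.0f} points, {rel:.0%} relative lift")  # +16 points, 47% relative lift
```

Reporting both numbers avoids ambiguity. “16 points higher” and “47% more completions” describe the same result.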

Time‑to‑Action (TTA)

How quickly do users complete the task after seeing the guide?

If a guide reduces a 2‑day delay to a few hours, that’s a real improvement. TTA can be harder to quantify, though, especially if your tool makes it difficult or even impossible to track.
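TTA can be approximated as the median delay between when each user first saw the guide and when they completed the goal. This requires a tool that lets you export both timestamps. This is a minimal sketch under that assumption. The user-ID-to-timestamp dictionaries are a hypothetical export format, not a specific DAP’s data model.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


def median_time_to_action(
    seen_at: dict[str, datetime],
    goal_at: dict[str, datetime],
) -> Optional[timedelta]:
    """Median delay between a user seeing the guide and completing the goal.

    Both dicts map a user ID to a timestamp (hypothetical export format).
    Users who never completed the goal, or completed it before seeing the
    guide, are left out. Returns None if no user qualifies.
    """
    delays = [
        goal_at[user] - seen
        for user, seen in seen_at.items()
        if user in goal_at and goal_at[user] >= seen
    ]
    if not delays:
        return None
    return median(delays)


# Made-up timestamps for illustration; u3 never completed the goal.
seen = {
    "u1": datetime(2024, 5, 1, 9, 0),
    "u2": datetime(2024, 5, 1, 10, 0),
    "u3": datetime(2024, 5, 1, 11, 0),
}
goal = {
    "u1": datetime(2024, 5, 1, 11, 0),
    "u2": datetime(2024, 5, 1, 14, 0),
}
print(median_time_to_action(seen, goal))  # 3:00:00
```

The median is used rather than the mean so that a handful of users who come back days later don’t dominate the result.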

Error Reduction

Did the guide lower mistakes, bounced attempts, or repeated confusion?

This one can also be difficult to measure and will likely require input from various data teams, not just the guide analytics.

Support Deflection

Are fewer people asking the same question after the guide goes live?

There are lots of ways to determine support deflection. It sometimes takes creativity, and it’s not always an exact science. The key is to make a plan ahead of time rather than trying to interpret the data after the fact.
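One simple plan is to tag support tickets by topic and then compare average weekly tickets on the guide’s topic before and after launch. The sketch below assumes that approach. `ticket_change` is a hypothetical helper, and the weekly counts in the example are made up for illustration.

```python
def ticket_change(before: list[int], after: list[int]) -> float:
    """Relative change in average weekly tickets on the guide's topic.

    `before` and `after` are weekly ticket counts for comparable windows
    before and after the guide went live. Negative means fewer tickets.
    """
    if not before or not after:
        raise ValueError("both windows need at least one week of data")
    avg_before = sum(before) / len(before)
    avg_after = sum(after) / len(after)
    if avg_before == 0:
        raise ValueError("no baseline tickets to compare against")
    return (avg_after - avg_before) / avg_before


# Hypothetical weekly ticket counts for four weeks on each side of launch.
change = ticket_change(before=[40, 44, 38, 42], after=[30, 28, 33, 29])
print(f"{change:+.0%}")  # -27%
```

A drop like this is suggestive rather than proof. Seasonality, product changes, or other guides can also move ticket volume, which is one more reason to define the comparison before the guide goes live.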

Notice what’s missing from this list?

Views. Step completions. Clicks.

Those aren’t measures of success; they’re diagnostic breadcrumbs. Yes, they can be part of a bigger story, but they need more information around them. Above all, you need to define the goal and what success looks like from the beginning.

Diagnosing When a Guide Isn’t Working

There are a lot of subtleties baked into the analytics of in-app guides. The numbers can only tell you so much, but they do tell a story if you look deeply enough and take the time to uncover it.

Here are some metrics we look at and what they often mean. They help with troubleshooting without getting lost in vanity metrics:

- Low guide views? Wrong placement or weak targeting.

- Low guide completion? Guide too long, unclear, or poorly timed.

- Low goal completion? The guide isn’t solving the real problem, and/or is too long.

- Slow time-to-action? Hidden friction in the workflow.

Analytics don’t just measure success; they tell a story about what users need and whether the guide is effective.

What Adoption Really Means

In‑app help adoption isn’t about:

- How many people opened the guide.

- How many steps they clicked through.

- How many times the guide triggered.

Adoption means:

Users consistently perform the intended task more often, more accurately, and more quickly after seeing the guide.

That’s training adoption. That’s behavior change. And that is success you can measure, report, and celebrate.

Wrap Up

It’s easy to think of in‑app help as just another layer of UI providing information to users. It’s so much more than that. It’s training delivered at the moment of need. And like all good training, it must be tied to a clear outcome.

When you design each guide around one specific goal, track the behavior that follows, and judge the guide by that behavior, you uncover the truth:

A successful guide doesn’t just get attention.

A successful guide gets action.

That’s adoption. That’s impact. And that’s how in‑app help earns its place in the product experience and adds value to the organization and to the success of the application, whether commercial or internal.

We work with in-app guides for training and help organizations quantify their success in real numbers rather than vanity metrics. Schedule a consultation to learn how we can help your organization train employees more effectively with contextual help and quantify those efforts.